Expert Panel Reaffirms Beta-blockers as First-Line Therapy for Hypertension in India

India: An expert panel has developed a consensus on the role of beta-blockers in managing hypertension. The Indian consensus, published in the Journal of the Association of Physicians of India (JAPI), provides graded recommendations on the clinical role of beta-blockers in hypertension (HTN), HTN with additional cardiovascular (CV) risk, and HTN with type 2 diabetes mellitus (T2DM).

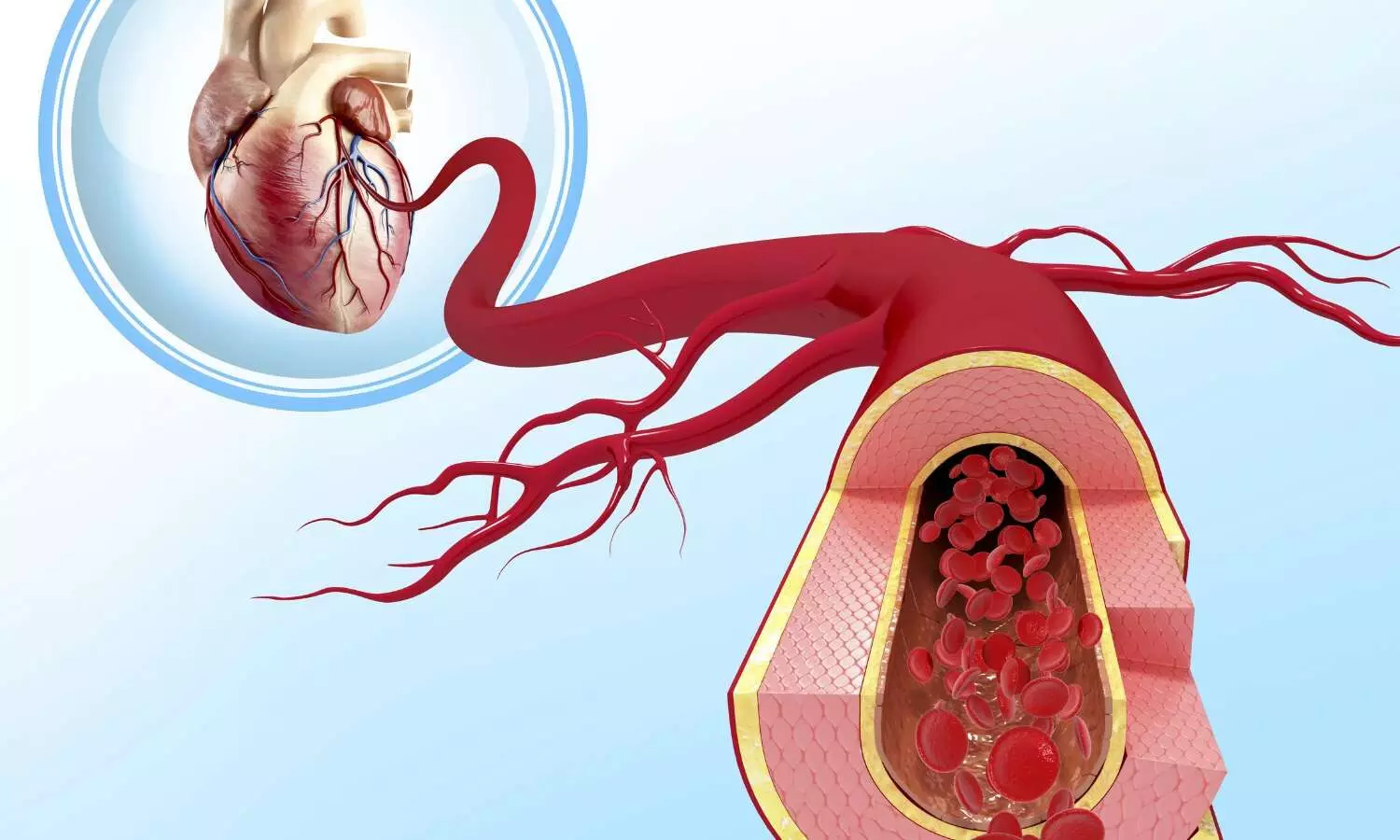

Hypertension, a condition affecting millions worldwide, remains a significant health challenge, especially in India, where early-onset cardiovascular disease (CVD) is a growing concern. Despite advances in treatment, essential hypertension remains difficult to manage: control is achieved in fewer than 1 in 10 cases, particularly when measured against the targets in updated guidelines from the American College of Cardiology (ACC) and the International Society of Hypertension (ISH). In response to these challenges, the positioning of beta-blockers, particularly nebivolol, has evolved to reflect characteristics common in the Indian population, including premature CVD, fragile coronary architecture, and high resting heart rates.

The panel, comprising clinical and interventional cardiologists, synthesized current evidence and guidelines, including the 2023 European Society of Hypertension (ESH) guidelines, into graded recommendations. The Indian consensus aims to clarify the clinical role of beta-blockers in treating hypertension, particularly when it coexists with comorbid conditions such as T2DM or additional cardiovascular risk factors.

The consensus findings emphasize the pivotal role of beta-blockers, including nebivolol, in hypertension management. An overwhelming 94% of the panelists agreed that the 2023 ESH hypertension guidelines have increased confidence in the use of beta-blockers as a first-line therapy for HTN. In particular, beta-blockers are recommended for individuals with hypertension who exhibit high resting heart rates, such as younger hypertensive patients under the age of 40. Moreover, for those under 60 years old, regardless of comorbid conditions, beta-blockers are considered the preferred drug choice.

For hypertensive patients with T2DM, 95% of the experts favored nebivolol due to its beneficial effects in managing both hypertension and diabetes. Nebivolol was also deemed the most suitable beta-blocker for patients with angina, with metoprolol and bisoprolol following closely behind in preference. In patients with chronic obstructive pulmonary disease (COPD) and hypertension, nebivolol was once again the most preferred option, as it tends to cause fewer pulmonary side effects compared to other beta-blockers.

The consensus also highlighted the importance of combining nebivolol with angiotensin receptor blockers (ARBs) in patients who do not respond well to ARB monotherapy. The panel further recommended considering this combination for hypertensive patients with diabetes and for those with ischemic heart disease (IHD), such as patients presenting with angina or myocardial infarction (MI).

Consensus on the Role of Beta-blockers in Hypertension

First-line Treatment

- Beta-blockers are classified as first-line drugs for the treatment of hypertension (HTN).
- 94% of respondents stated that the updated ESH HTN guidelines (2023) have increased confidence in using beta-blockers for HTN management.

… in Comorbid Conditions

- … patients with comorbid conditions such as myocardial infarction (MI), heart failure with reduced ejection fraction (HFrEF), and atrial fibrillation (AF).
- They are also beneficial in patients with high resting heart rates, providing additional protection.

… Resting Heart Rates

- Beta-blockers are recommended for hypertensive patients with high resting heart rates, including younger hypertensives under the age of 40.

… Heart Rate and Blood Pressure

- … of blood pressure and mortality risk.
- Beta-blockers are recommended for patients under 60 years of age with HTN, regardless of the presence of comorbid diseases.

… in Hypertensive Patients with Diabetes

- Nebivolol is the most preferred beta-blocker for hypertensive patients with diabetes.
- Other commonly preferred options include bisoprolol and metoprolol.

Nebivolol in COPD and HTN

- Nebivolol is preferred in patients with chronic obstructive pulmonary disease (COPD) and HTN due to its favorable side effect profile.

Nebivolol + ARB Combination

- Combination therapy with nebivolol and an ARB can be considered in:
  - Patients who do not respond well to ARB monotherapy
  - Hypertensive patients with diabetes
  - Patients with HTN and ischemic heart disease (IHD), particularly those experiencing angina or myocardial infarction (MI)

“The consensus firmly places beta-blockers, especially nebivolol, at the forefront of hypertension treatment in India. The findings support their use in various patient subsets, including those with high resting heart rates, younger hypertensives, and patients with comorbid conditions like T2DM and COPD. Given the unique health challenges in India, these updated recommendations provide a comprehensive approach to managing hypertension, ensuring better long-term outcomes for patients,” the researchers concluded.

Reference:

Mohan JC, Roy DG, Ray S, et al. Position of Beta-blockers in the Treatment of Hypertension Today: An Indian Consensus. J Assoc Physicians India 2024;72(10):83-90.
