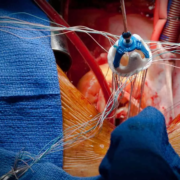

Early surgery for acute active infective endocarditis feasible soon after stroke, claims study

Researchers report in a new study that early valve surgery for acute infective endocarditis in patients with a preoperative stroke is feasible and non-inferior with respect to postoperative stroke risk. Based on a retrospective review of an institutional database, the authors conclude that timely surgical intervention can safely be considered in this high-risk population. The study, by Kareem Wasef and colleagues, was published in The Annals of Thoracic Surgery.

Acute infective endocarditis can lead to serious complications such as acute stroke. The appropriate timing of valve repair or replacement in these patients is controversial, because an increased incidence of hemorrhagic conversion has been reported in this group. The timing of surgery strongly influences patient outcomes. The present study compared the outcomes of early versus delayed valve surgery in patients who had a preoperative stroke caused by acute endocarditis.

This was a retrospective review of an institutional Society of Thoracic Surgeons database that included all patients who underwent valve surgery for active infective endocarditis from 2016 to 2024. Electronic medical records provided stroke details and longitudinal follow-up. Outcomes and survival were assessed with descriptive statistics and Kaplan-Meier survival curves.

Results

The study included 656 patients who underwent surgery for acute active infective endocarditis. Of these, 98 (14.9%) had a preoperative stroke: 86 (87.8%) had embolic strokes, 16 of which (18.6%) showed micro-hemorrhages, and 12 (12.2%) had hemorrhagic strokes. The mean time from diagnosis of the preoperative stroke to surgery was 5.5 days.
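As a quick consistency check, the subtype percentages above can be recomputed from the counts. The minimal Python sketch below uses only the counts stated in the article; the variable names and the `pct` helper are illustrative, and it assumes percentages are rounded to one decimal place.

```python
# Reported counts from the preoperative stroke analysis.
TOTAL_PATIENTS = 656
PREOP_STROKE = 98
EMBOLIC = 86
MICRO_HEMORRHAGE = 16
HEMORRHAGIC = 12


def pct(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


summary = {
    "preop stroke / all patients": pct(PREOP_STROKE, TOTAL_PATIENTS),
    "embolic / preop stroke": pct(EMBOLIC, PREOP_STROKE),
    "hemorrhagic / preop stroke": pct(HEMORRHAGIC, PREOP_STROKE),
    # Two candidate denominators for the micro-hemorrhage figure.
    "micro-hemorrhage / embolic": pct(MICRO_HEMORRHAGE, EMBOLIC),
    "micro-hemorrhage / preop stroke": pct(MICRO_HEMORRHAGE, PREOP_STROKE),
}

# Embolic and hemorrhagic strokes together account for every preoperative stroke.
assert EMBOLIC + HEMORRHAGIC == PREOP_STROKE

for label, value in summary.items():
    print(f"{label}: {value}%")
```

Only the embolic group as denominator (16/86) reproduces the reported 18.6%; dividing by all 98 preoperative strokes gives 16.3%. This suggests, although the article does not state it explicitly, that micro-hemorrhages were counted within the embolic group rather than as a separate stroke type.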

• The overall incidence of postoperative stroke was 2.1% (14 of 656 patients).

• No statistically significant difference was observed in postoperative stroke rates between patients with and those without a preoperative stroke (4.1% vs 1.8%, P = 0.148).

• Postoperative hemorrhagic strokes were more frequent in the preoperative stroke group (3.1% vs 0.5%).

• Among patients with a preoperative stroke, early surgery (within 72 h) did not increase the incidence of postoperative stroke compared with later surgery (2.6% vs 5.0%, P = 0.564).
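The article reports percentages and P values but not the underlying event counts or the statistical test used. The Python sketch below works under two stated assumptions: it back-calculates the counts consistent with the one-decimal percentages, then applies a two-sided Fisher's exact test, one common choice for a 2x2 table with small cells. The choice of test and the helper names (`counts_matching`, `fisher_exact_two_sided`) are hypothetical, so the computed P value need not match the reported P = 0.148.

```python
from math import comb


def counts_matching(pct: float, n: int) -> list[int]:
    """Event counts k in 0..n whose percentage, rounded to one decimal, equals pct."""
    return [k for k in range(n + 1) if round(100 * k / n, 1) == pct]


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    With all margins fixed, the top-left cell follows a hypergeometric
    distribution; the P value sums the probabilities of every table that
    is no more likely than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    total = comb(n, col1)

    def prob(x: int) -> float:
        # Probability that the top-left cell equals x, given the margins.
        return comb(row1, x) * comb(n - row1, col1 - x) / total

    lo, hi = max(0, col1 - (n - row1)), min(row1, col1)
    p_obs = prob(a)
    probs = (prob(x) for x in range(lo, hi + 1))
    # Small relative tolerance so tables tied with the observed one are kept.
    tail = sum(p for p in probs if p <= p_obs * (1 + 1e-7))
    return min(1.0, tail)


# Postoperative strokes: 98 patients with vs 558 without a preoperative stroke.
with_stroke = counts_matching(4.1, 98)
without_stroke = counts_matching(1.8, 558)
print(with_stroke, without_stroke)  # [4] [10], which sums to the 14 reported strokes

a, c = with_stroke[0], without_stroke[0]
p_value = fisher_exact_two_sided(a, 98 - a, c, 558 - c)
print(f"Fisher's exact two-sided P = {p_value:.3f}")
```

Fisher's exact test is used here because several cells are small; if the authors used a chi-square test or another method, their P value will differ from this one.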

The study therefore suggests that patients with acute infective endocarditis and a preoperative stroke have postoperative stroke rates similar to those of patients without a preoperative stroke. Although postoperative hemorrhagic strokes were more frequent in the preoperative stroke group, the overall risk of postoperative stroke did not differ significantly, supporting the feasibility of early surgical intervention.

These results are especially relevant for clinicians treating endocarditis complicated by stroke, as they suggest that early surgery does not increase postoperative stroke risk. The slightly higher risk of hemorrhagic conversion, however, calls for careful patient selection and perioperative management.

The study concludes that early valve surgery for acute endocarditis in patients with a preoperative stroke is feasible and safe. Postoperative stroke risk in this high-risk population was non-inferior, which supports early surgical intervention as a way to potentially improve outcomes. Further studies are warranted to refine patient selection and optimize perioperative care, with the aim of reducing the risk of hemorrhagic conversion.
